Your AI-powered app might already be illegal in the EU. That's not hyperbole.

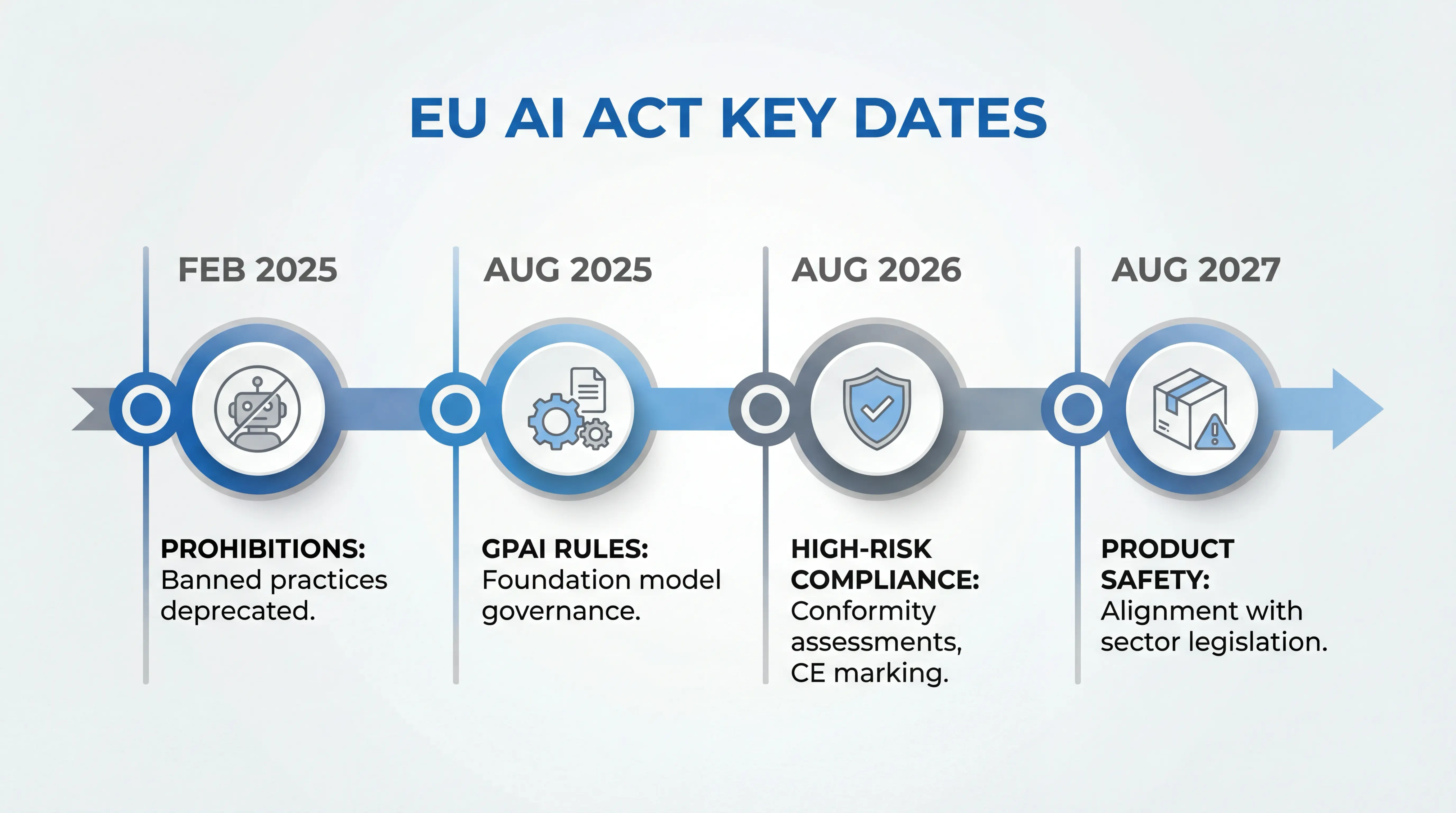

Since February 2025, specific AI practices have been outright banned in Europe. By August 2026, apps falling into "high-risk" categories will need conformity assessments, quality management systems, and CE markings. If you're building apps that use machine learning, recommendation engines, or integrate with foundation models like GPT-4 or Claude, this affects you.

The EU AI Act is the world's first comprehensive legal framework for artificial intelligence. Think of it as GDPR for AI, but more technically complex. It applies to any provider placing AI systems on the EU market, regardless of where they're based. Building apps in Manchester for EU users? You're in scope.

We recently audited an AI-enabled communications platform targeting healthcare and care home sectors. The findings were sobering: the platform qualified as high-risk through three separate pathways (healthcare services, workforce management, and content moderation), yet its compliance readiness was approximately 15%. The most critical gap? Complete absence of AI operation logging.

Article 5 of the Act establishes outright bans. These aren't "comply or pay a fine" situations. These features must be removed from production immediately.

The Act prohibits AI systems that use subliminal techniques or manipulative methods to distort user behaviour in ways that cause harm.

What does this mean practically? Those notification delivery algorithms optimised to target users when they're most vulnerable. Push notification timing that exploits sleep-deprived states to induce purchases. "Dark pattern" UX that AI has optimised to maximise engagement or retention by exploiting psychological weaknesses.

If your recommendation engine's objective function implicitly optimises for vulnerability exploitation, you have a problem.

AI-powered emotion detection is banned in two specific contexts: the workplace and educational institutions.

That "student engagement tracker" using facial analysis? Illegal. HR interviewing tools that analyse candidate "sentiment" or "nervousness" from video feeds? Also illegal.

This doesn't mean emotion recognition is banned everywhere. Driver safety monitoring and medical diagnosis (detecting pain in patients) remain legal. But if you're building a "Zoom for Education" competitor with attention monitoring, you need to rethink that feature.

Using AI to infer race, political opinions, religious beliefs, or sexual orientation from biometric data is prohibited.

Any system that generates a "trustworthiness score" by aggregating disparate data sources (credit, social media, behaviour patterns) leading to unfavourable treatment is banned. If your app architecture resembles China's social credit system, even unintentionally, you're on the wrong side of the law.

Systems assessing crime risk based solely on profiling are prohibited. This one primarily affects public sector applications, but the principle extends to any "risk assessment" based purely on demographic or behavioural profiles.

Your app is high-risk if it uses AI for education evaluation, employment task allocation, credit scoring, critical infrastructure management, or biometric identification. Annex III of the Act lists these specific use cases, and regardless of your underlying technology's complexity, falling into these categories triggers conformity assessments, quality management systems, and CE marking requirements by August 2026.

If your app falls into these categories, you'll need conformity assessments, quality management systems, CE marking, and registration in the EU database by August 2026.

Education and Vocational Training

Systems that determine access to education, assign students to institutions, or evaluate learning outcomes are high-risk. That "AI Tutor" app adapting curriculum based on student performance? If its output formally evaluates outcomes or steers the learning process, it's probably high-risk.

Employment and Workers Management

Recruitment tools (CV parsing, candidate ranking) and systems for task allocation or performance monitoring fall here. This is the big one for gig economy apps. If your algorithm assigns rides, deliveries, or tasks to workers, you're operating a high-risk system because it determines "access to self-employment."

Credit and Insurance

AI-powered credit scoring (except for fraud detection) and life/health insurance risk assessment are high-risk. Your "Buy Now, Pay Later" app using AI to determine credit limits? High-risk.

Critical Infrastructure

AI components managing road traffic, water, gas, heating, or electricity supply. If you're building apps that optimise HVAC systems in commercial buildings for energy efficiency, think carefully. If a failure could disrupt heating supply, you're likely high-risk.

Biometrics

Remote biometric identification (even post-event) and biometric categorisation trigger high-risk status.

In our recent audit of a healthcare communications app, the client was surprised to discover their platform triggered high-risk classification through multiple routes. Their RAG-based Q&A feature? High-risk because it operates in healthcare settings and may influence care decisions. Their engagement analytics? High-risk under the workforce management provisions. Their planned AI content moderation? High-risk due to fundamental rights implications in safeguarding contexts. One platform, three compliance pathways.

A system listed in Annex III isn't automatically high-risk if it doesn't pose a "significant risk of harm." The derogation applies when your AI:

There's a catch. If your system performs profiling of natural persons, it's always high-risk. No exceptions.

Strategic Implication: You can de-risk a product by narrowing its scope. Instead of "Automated Recruitment Screening Tool" (high-risk), position it as a "Keyword Highlighting Tool for Recruiters" (potentially lower risk). But this requires your "intended purpose" documentation to match what the system actually does.

For high-risk systems, compliance isn't a checkbox exercise. It's architectural.

Training datasets must be "relevant, sufficiently representative, and to the best extent possible, free of errors and complete."

Nobody has error-free datasets at scale. The regulators know this. What they're looking for is a rigorous process of error detection and correction. Automated data quality checks (using tools like Great Expectations) validating schema, distribution, and null values. The logs of these checks become your compliance evidence.

You must examine datasets for biases affecting health, safety, or fundamental rights. The Act uniquely allows processing of special category data (race, ethnicity) solely for bias monitoring, provided you implement strict security measures and immediately delete the data afterward. This means secure "clean room" environments where demographic data joins training data temporarily to calculate fairness metrics before being discarded.

High-risk systems must automatically generate logs enabling traceability. Mandatory attributes include:

For high-traffic LLM applications, this generates massive storage volumes. And logging inputs might capture PII, conflicting with GDPR's data minimisation principle.

Practical Solutions:

When we audited a client's AI-enabled platform recently, logging was the most significant gap. They had basic Firebase Analytics for telemetry, but zero compliance-grade logging: no AI decision logs, no input data recording, no model version tracking, no human override logging. For their RAG system specifically, there was no record of which documents were retrieved, what confidence scores drove ranking decisions, or which prompt template versions were in use. Rebuilding this from scratch added significant development time to their compliance roadmap.

Compliance-grade logging typically represents 15-25% of the total AI implementation effort. Most apps we audit have basic analytics (Firebase, Mixpanel) but zero compliance-ready logging for AI operations. Retrofitting this is significantly more expensive than building it in from the start.

Standard security (firewalls, encryption) isn't enough. The Act requires protection against AI-specific vulnerabilities:

Data Poisoning: Attacks manipulating training data to introduce backdoors

Adversarial Attacks: Inputs designed to trick the model (adding noise to misclassify images)

Model Extraction: API abuse to reverse-engineer model weights

Prompt Injection: Manipulating LLM behaviour through malicious inputs

Fallback mechanisms are mandatory. If AI confidence drops below threshold, the system must fail over to heuristic rules or human escalation. Not hallucinate.

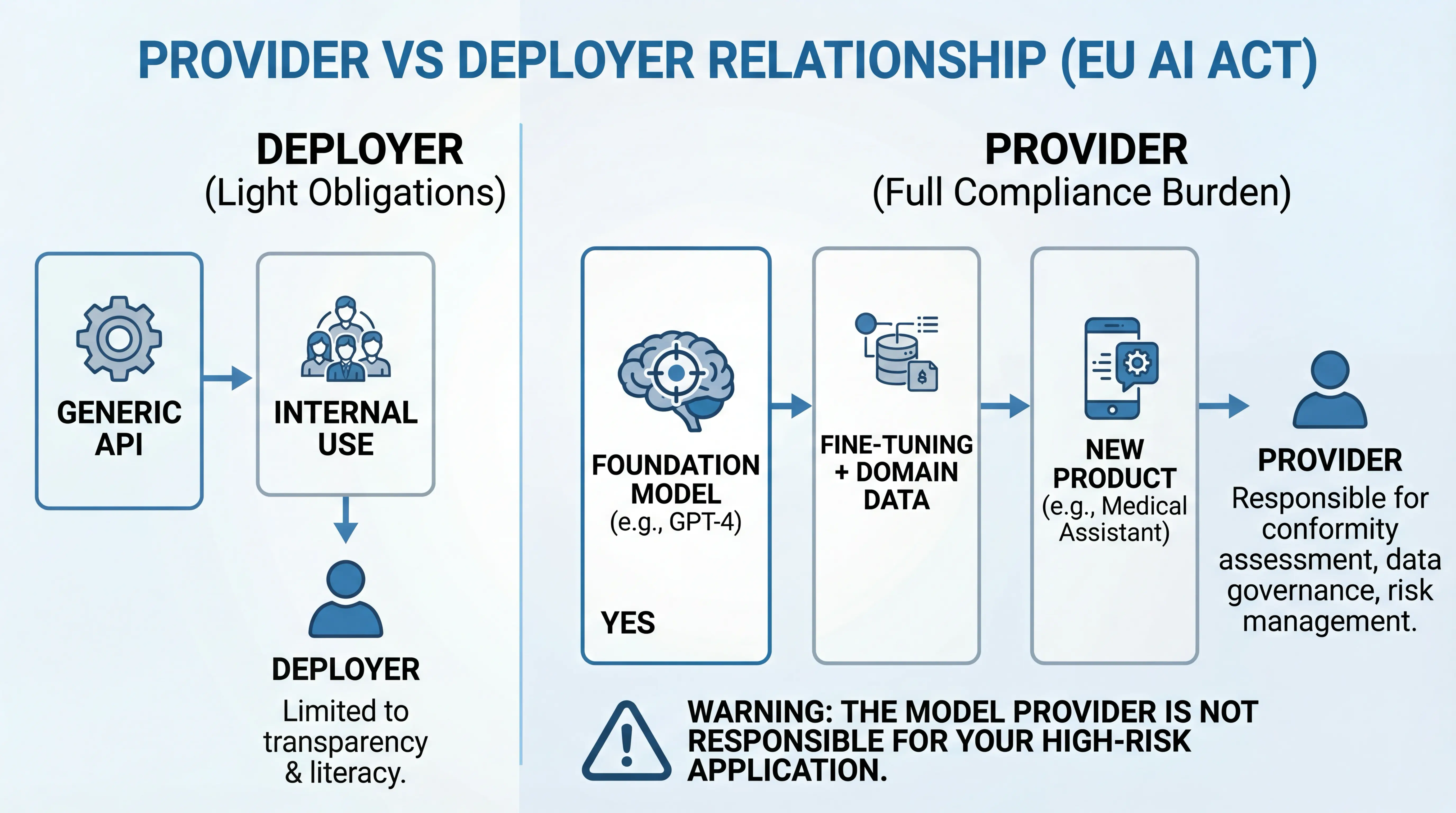

Most modern apps are wrappers around third-party APIs. OpenAI, Google Vertex AI, Azure AI, Anthropic's Claude. The EU AI Act makes this relationship complicated.

Deployer: You use a generic "Sentiment Analysis API" to sort customer feedback internally. Your obligations are limited (transparency and literacy).

Provider: You take a foundation model, fine-tune it on medical data, and release "Dr. AI" as a medical diagnosis assistant.

In the second scenario, you're the Provider of a High-Risk AI System. OpenAI or Anthropic isn't the provider of your specific medical system. You are. You're fully responsible for conformity assessment, data governance, and risk management of the entire system, including the base model you don't control.

We've seen this scenario play out with a client's white-label platform. They provided a generic AI communications tool that healthcare organisations could rebrand. Under Article 25, those deployers risked becoming providers simply by putting their own name on the system. The liability implications weren't in anyone's contracts. We now advise explicitly addressing this in terms of service.

If you become a Provider based on a third-party model, you face a "compliance gap." You need technical details about the base model (training data, validation results) to complete your conformity assessment. The API provider might consider this a trade secret.

Article 53 of the Act obliges GPAI providers to supply technical documentation enabling downstream providers to comply. Your contracts with API providers should explicitly reference Article 53 cooperation obligations. If a provider refuses to supply necessary compliance data, you cannot legally build a high-risk system on their platform.

Compliance with the EU AI Act isn't something you implement once. It requires ongoing habits.

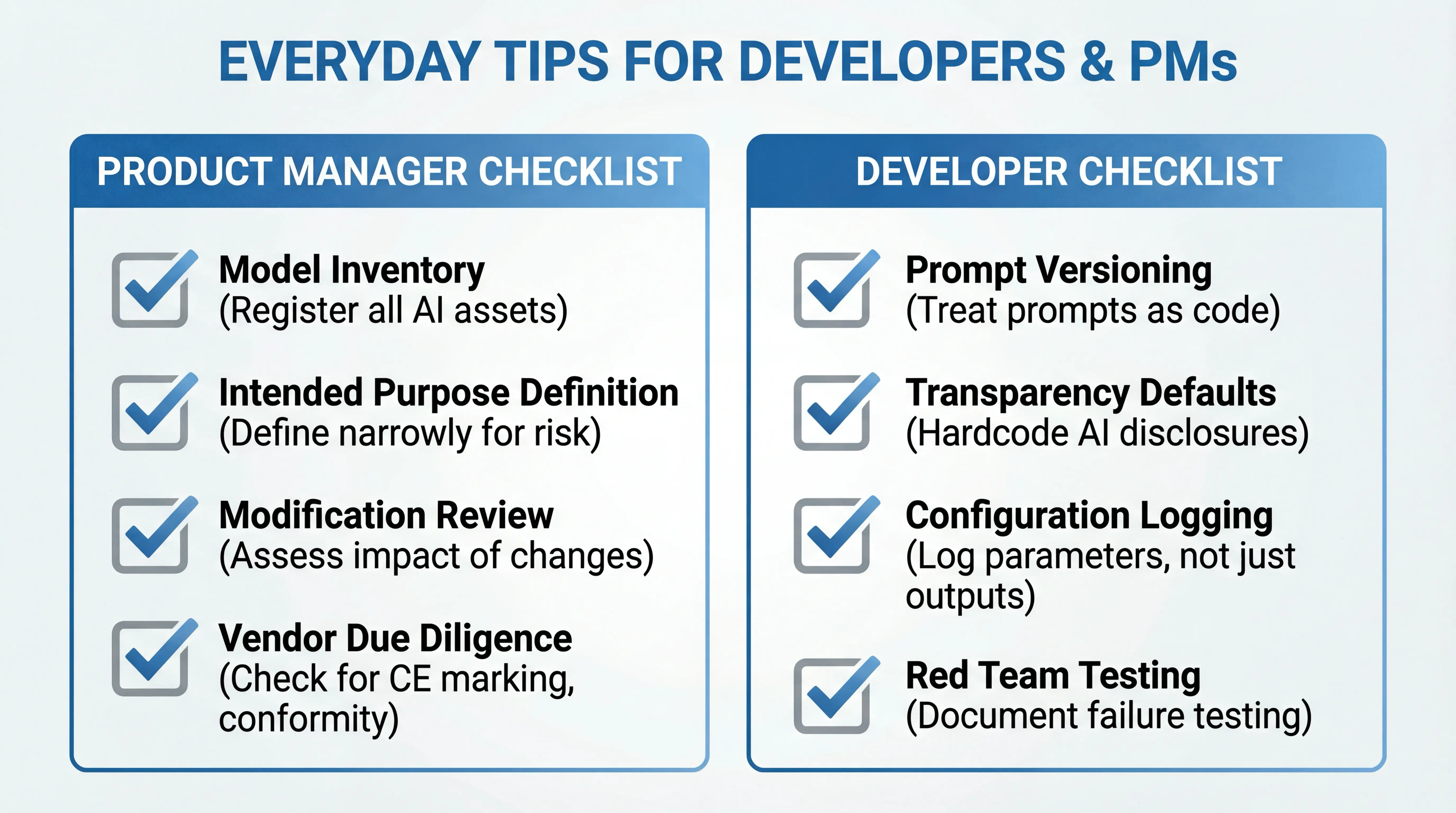

Define "Intended Purpose" narrowly. The legal definition of your product's purpose determines its risk class. Avoid marketing language like "AI that assesses potential" if the tool simply matches keywords. Precise language prevents regulatory headaches.

Maintain a Model Inventory. Know every place AI is used in your product, its risk classification, and its data sources. Treat this like an asset register.

Check for Substantial Modification. Before approving major model updates or prompt engineering changes that alter system behaviour, verify whether it triggers re-assessment of compliance.

Vendor Due Diligence. When buying AI tools, ask: "Is this CE marked? Where's your Declaration of Conformity?"

Treat Prompts as Code. Prompts define system behaviour. Version control them (Git), review them, test them. They're not throwaway strings.

"I am a Robot" by Default. Hardcode transparency disclosures. Every conversation start or generated image should carry disclosure metadata. Article 50 requires users to know they're interacting with AI.

Log the "Why." Don't just log outputs. Log configuration parameters (temperature, top_p) and prompt template versions. You need to explain why the system produced a specific output.

Red Team Your Own Features. Don't just test if it works. Test how it fails. Try to make your chatbot produce harmful outputs. Try to bias your classifier. Document these tests. They're your "risk management" evidence.

Use Standard Libraries. Leverage open-source compliance tools. C2PA libraries for watermarking images. Fairness toolkits (e.g. Fairlearn) for bias detection. Don't reinvent these wheels.

The Act is being enforced in phases:

The European Commission's November 2025 "Digital Omnibus" proposal introduces flexibility. If harmonised standards aren't finalised, high-risk system deadlines could slide 6-12 months. A "long-stop" date of December 2027 ensures eventual enforcement regardless.

Don't rely on delays. Architect for August 2026. Use any extension for rigorous testing, not deferred development.

Here's the thing. The EU AI Act isn't just regulatory burden. It's becoming a quality standard.

Just as GDPR became the de facto global privacy benchmark, "EU AI Act compliant" is emerging as a trust signal. Customers in the US, Asia, and elsewhere increasingly expect the same standards. Building for EU compliance isn't a regional cost. It's a global quality investment.

Companies that implement rigorous risk management, bias testing, and transparency now will be audit-ready while competitors scramble. The "Compliance-as-a-Service" middleware market is emerging (automated bias checking, secure logging vaults, hallucination firewalls). Early adopters will have smoother paths to market.

The regulatory environment rewards organisations that treat "Trustworthy AI" as a feature, not a constraint.

If you're building apps with AI capabilities for EU users, start here:

The August 2026 deadline for high-risk systems isn't far away. Eighteen months sounds comfortable until you factor in development cycles, testing, and conformity assessments.

From the compliance audits we've conducted, most AI-powered apps are operating at roughly 15% readiness for the August 2026 deadline. The gap isn't awareness. It's implementation. Logging infrastructure, documentation, and conformity assessment preparation take longer than teams expect.

The EU AI Act represents a significant shift in how AI-powered apps must be designed, documented, and operated. If you're unsure how your current or planned mobile app fits within these regulations, or you need help architecting compliant systems from the ground up, we're here to help.

At Foresight Mobile, we build AI-integrated mobile applications with compliance considered from day one. Whether you're concerned about risk classification, logging requirements, or third-party API dependencies, get in touch to discuss your specific situation.

We offer a structured discovery process through our App Gameplan service. In four weeks, you'll know exactly what to build, what compliance considerations apply, and how to move forward with confidence.

Sources: